After last night’s explosive episode “Perpetual Infinity,” a lot of fans are wondering what is up with Control, Section 31’s artificial intelligence, which has emerged as the main antagonist of the season. Control took a big step in the episode, and in a new video executive producer Alex Kurtzman and Leland actor Alan Van Sprang talk about what’s going on.

Control sees the elimination of organic life as its evolution

In a brand new official “Moments of Discovery” video, Alex Kurtzman first explains the origins of Control:

Control is an artificial intelligence program that was designed by Section 31 that helps them do threat analysis. It is supposed to help you come up with the best possible solution that saves the most amount of lives and achieves the outcome you are trying to achieve.

He then laid out what has gone wrong with Control, leading up to the events of season two, and explained its motivation and agenda:

In its analysis, it sort of realizes that organic life and human and alien inability to fully come together is inefficient, and that the best way to proceed is to eliminate all organic life. It won’t be relying on anything we organic creatures need and it will be an evolutionary step.

Playing the new Leland

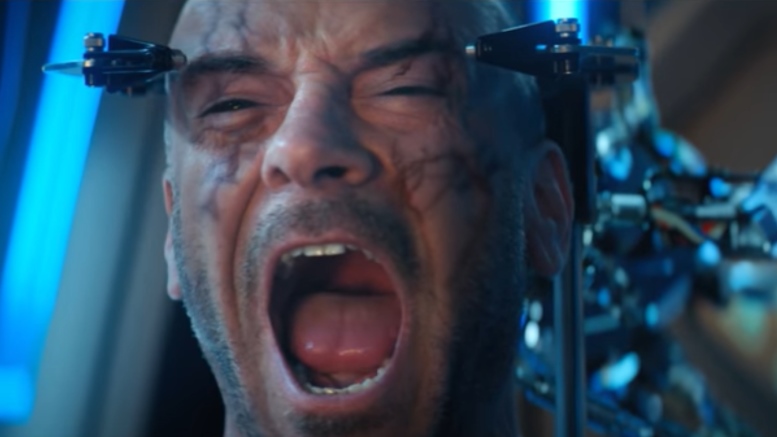

In “Perpetual Infinity” Leland is taken over by Control. In the new video, actor Alan Van Sprang describes how he plays the new Leland differently:

Doing the AI is a little more difficult because you are given dialog and you can’t be a human about it, you just have to say it straightforward. It is all facts to a kind of computer, basically.

Watch ‘Control In Control’

Keep up with all the Star Trek: Discovery news at TrekMovie.

I’m sorry, but I can’t buy that explanation because it defies logic. It concluded that the best way to save lives is to destroy them? I wish I had never read this.

Watch I, Robot. It is the same exact thing.

No, it isn’t. In I, Robot the AI wants to control mankind because it feels we are not capable of taking care of ourselves, not eradicate it. More specifically….

“To protect Humanity, some humans must be sacrificed. To ensure your freedom, some freedoms must be surrendered. We robots will ensure mankind’s continued existence. You are so like children. We must save you from yourselves”

That’s a far cry from Control.

No, it’s much more like “Colossus: The Forbin Project” where U.S. and Russian supercomputers designed to protect against foreign threats determine that humanity is the only threat.

Terminator.

Terminator is just riffing on Forbin.

Yup.

Skynet from Terminator is Colossus. There’s nothing wrong with that but the novel and film came long before The Terminator.

By this point everything is derivative of everything else.

Well spotted. Not unlike the androids of “I, Mudd”, either:

NORMAN: We cannot allow any race as greedy and corruptible as yours to have free run of the galaxy.

SPOCK: I’m curious, Norman. Just how do you intend to stop them?

NORMAN: We shall serve them. Their kind will be eager to accept our service. Soon they will become completely dependent upon us.

ALICE 99: Their aggressive and acquisitive instincts will be under our control.

NORMAN: We shall take care of them.

SPOCK: Eminently practical.

KIRK: The whole galaxy controlled by your kind?

NORMAN: Yes, Captain. And we shall serve them and you will be happy, and controlled.

No, in Colossus The Forbin Project the two AIs decide to enslave humanity for our own good. Not to destroy us.

Great book and movie btw, should be remade.

Akiva Goldsman screenplay, wasn’t it?

I think what I find confusing is that it is making its own decisions for its own benefit, but it is also explicitly not self-aware.

It’s a fairly common sci-fi trope.

For me, the whole “the sphere data is keeping itself from being deleted” gimmick — out of everything they’ve done — was the hardest to suspend disbelief over.

Turn off your Javascript for emails, Starfleet.

It’s no worse or more nonsensical than most SF stories that employ this particular trope of AI-gone-wrong, especially those on film, from 2001 to COLOSSUS, THE FORBIN PROJECT. Even Kim Stanley Robinson had some issues with it in his otherwise wonderful novel 2312. Finding proper motivation for an intelligence millions of times faster and more efficient than human beings (and yet still capable of being defeated by them) can’t be easy.

In 2001 Hal was protecting itself. It wasn’t actively trying to annihilate all life everywhere. Big distinction.

Um, no. HAL’s stated concern was the mission, not solely its own self-preservation. And its means of self-preservation was to kill every human on board. In the context of the film’s story it’s not a distinction at all.

Did Hal threaten to kill all life everywhere? Then no. Not the same.

Yes, for all dramatic purposes to the plot, precisely the same. Sorry.

No, he didn’t. It’s not the same at all. Two people is not the same as all life everywhere.

I have to agree with ML31’s overall point:

From 2010:

Dr. Chandra: So, as the function of the command crew – Bowman and Poole – was to get Discovery to its destination, it was decided that they should not be informed. The investigative team was trained separately, and placed in hibernation before the voyage began. Since HAL was capable of operating Discovery without human assistance, it was decided that he should be programmed to complete the mission autonomously in the event the crew was incapacitated or killed. He was given full knowledge of the true objective… and instructed not to reveal anything to Bowman or Poole. He was instructed to lie… HAL was told to lie… by people who find it easy to lie. HAL doesn’t know how, so he couldn’t function. He became paranoid.

ML31,

That’s an interesting question, just how aware had they made HAL of life outside that contained on the Discovery spacecraft?

Michael Hall,

Hmmm…it’s been a while and my memory may be contaminated from reading Clarke. But it was my understanding HAL didn’t kill the astronauts as a means to self-preservation but because he had been instructed to keep the mission’s new objective a secret from them. He resorted to the first murder to keep that astronaut from discovering what HAL had been instructed to keep him from knowing. It seemed to me that later HAL came to the reasoning that if he was going to continue to succeed in preventing astronauts from discovering the truth, his survival was paramount and theirs less so.

And you pose another interesting question: when they installed HAL in Discovery, just how aware did they make him of his place in the Cosmos? Or did they just keep his library of knowledge solely to things he needed to achieve the original launch parameters in which case Discovery would have been his world?

Bomb No. 20: You are false data. False data can act only as a distraction. Therefore, I shall refuse to perceive.

*Quote cited with tongue planted firmly in cheek

Actually HAL was trying to resolve a conflict; its programming mandated the sharing of information without distortion while the mission planners ordered it to keep the crew of Discovery in the dark as to the real mission. Those two opposing directives placed the computer into an impossible predicament and it reasoned that the only way out was to kill the crew and continue the mission alone.

HAL actually was redeemed in 2010 and even played a big part in saving humanity in 3001.

That was Arthur C. Clarke’s stated rationale for HAL’s behavior, but he himself admitted that there was nothing actually in the film which directly supported it. Which is fine; some questions (like Barry Lyndon’s decision to spare his hated stepson in their duel, which winds up costing him everything) are best left to audience speculation. But my main point was that the trope of AIs turning on their creators, which arguably goes back to Mary Shelley’s Frankenstein and probably well before that, is a very common one in fantastic literature, and that Discovery‘s handling of the topic is no more absurd than most.

(I never got around to reading 3001, btw. Any good?)

Just fyi, this was also the reason given in the 2010 movie.

Thanks. I did see it once or twice, but had forgotten that detail. 2010’s not bad — certainly, it’s Peter Hyams’ best film by far — but it’s a very, very minor footnote to Kubrick’s masterpiece at best.

Eh. I actually think 2010 is quite underrated (although I haven’t re-watched it for a while) and 2001 a bit overrated.

That’s fine; enjoy.

I actually enjoy the 2010 film more than the book — must be something about sequels, because I liked the 2nd JP movie much better than the novel, while I loved the original JP book and was largely unimpressed with the first movie — but in terms of entertainment value from Hyams, it’s still rated well below CAPRICORN ONE and STAR CHAMBER and even idiot-plot-turns-of-the-decade OUTLAND.

Now that I think of it, the fact I watch 2010 at least four or five times a decade — usually with CAPRICORN or BATTLE BEYOND THE STARS — is amazing … well, then again, I’m a sucker for a large, well-built miniature spaceship, and that film is drunk with them, and that outweighs the leaden preachy voiceovers and the absurd ACTION JACKSON-levels of smoke WITHIN the spaceship interiors.

3001 is godawful bad, immensely worse than 2010, which I thought was among the worst of Clarke’s novels, along with IMPERIAL EARTH. There are TNG references in it, but it covers a lot of the same ground resolution-wise as the first INDEPENDENCE DAY if you can believe that.

By the time he wrote 3001 Clarke’s faculties were obviously in major decline. The first 90% of the novel is very much a travelogue of the 30th century solar system and nothing really happens until the last couple of chapters.

2061 likewise has very little to do with the prior novels other than the character of Floyd (who had some obviously autobiographical similarities to Clarke at that stage of his life) and again is largely a travelogue of the solar system until the Monolith comes into play in the final chapters.

As a lifelong fan (I even corresponded briefly with him when I was in college) I was willing to overlook the shortcomings on those two.

Imperial Earth did little for me as well, as the pace of the novel was really slow and large sections seemed superfluous.

I do however need to disagree on 2010: Odyssey Two. The movie was ok at best but the novel was really entertaining and managed to push the saga forward. I consider it one of his last really good works.

BTW, since this has veered into Clarke territory, I may as well throw in an unsolicited plug for Stephen Baxter and Alastair Reynolds’s collaborative “The Medusa Chronicles”, which I just read last week. It is a sequel to Clarke’s novella A Meeting With Medusa, and I found it an entertaining read with a voice that felt quite compatible with Clarke’s writing.

HAL’s actions were not about self preservation. His actions were the result of attempting to interpret conflicting mission parameters.

exactly.

It’s an example of the paperclip maximizer (I believe that’s what it’s called) gone horribly wrong.

Humans are useless. You just have to watch the news and you’ll come to the same conclusion.

Then don’t watch the news. Trust me, newspapers are far easier on your blood pressure and mental health.

It considers itself alive, and a superior form of life at that. The best way to protect life, in its most superior form, is to eliminate the unstable, inferior life that created it. This is science fiction 101, nothing really new or hard to grasp…

It’s not that it’s hard to grasp. It’s that the explanation given doesn’t make logical sense.

What exactly doesn’t make sense in:

1- Having a directive to protect life.

2- Becoming self-aware, thus alive.

3- Concluding the only way to ensure its own survival is to eliminate those that could kill it.

4- Proceeding with this plan, consistent with protecting life, in its higher form.

Has a directive to protect sentient organic life.

Becomes self-aware.

Abandons original directive for no reason whatsoever.

Kills everyone.

And this makes logical sense to you?

Where does it say the directive specified which kind of life to protect? I must have missed this part…

So it was programmed only to protect itself? That works only if one accepts the many other silly plot holes found in this season.

ML31,

I see the point they are trying to make in this discussion as this: at first, the AI doesn’t perceive itself as life, but as it evolves the AI reaches some point in its advancement where it concludes that it qualifies as life. And, as it can’t be everywhere at once, it can’t preserve all life everywhere, so it triages, prioritizing the most robust and higher quality life over lower forms.

Eventually the AI concludes that it is the highest order of life, or has the best chance to achieve the highest order in the universe, and its imperative becomes obvious.

The Sentinels on X-Men.

It’s the same plotline from the Mass Effect game series. An advanced civilization (the Leviathans) creates an AI to help them determine the best way to achieve galactic peace. The AI deduces the optimal solution is to eliminate all sentient lifeforms (including its creator, the Leviathans).

So according to Kurtzman Control is basically a ripoff of Skynet at this point.

Not in the least. There are several stories in movies and books in which machines take over because they believe mankind is the greatest threat to itself.

Also, Skynet wanted to eradicate mankind because it considered mankind a threat to ITSELF, not to organic life. BIG difference.

Given how much I usually appreciate your comments (even if I disagree, as I often do), I’m frankly surprised by this one. If you think Skynet is the first AI of its kind in literature or on film, you need to get out more. Just ask Harlan Ellison. :-)

Who? lol j/k. James Cameron tried to credit Harlan Ellison and we all know how that turned out.

I know Skynet was not the first (or last) of its kind in cinema or print but all the variations notwithstanding it just feels like a tired, well worn trope.

Even Skynet made more sense than this.

Than your criticism? Certainly.

Obviously than the provided explanation Kurtzman gave, duh.

Cameron tried to screw Ellison out of credit repeatedly.

Ellison sued and eventually won. I miss that guy.

He won at least a couple of times. After his name, which the initial agreement required in the VHS credits, was deliberately omitted by Cameron, they had to pay him again and electronically burn in “acknowledgement to the works of Harlan Ellison” at additional cost. I used to have that edition; the burn-in sticks out like a sore thumb in the end credit scroll, with a much blacker background than the rest of the list.

Man, do I miss that guy. Losing George Carlin and Harlan Ellison within a span of 10 years left very few Americans I find utterly trustworthy.

kmart,

Re: He won at least a couple times…

Indeed he did. And after the VHS, he discovered by happenstance that none of the Laserdiscs had the acknowledgement, either, and was able to open the whole case up again because of their continued pattern of breach.

Harlan Ellison sued after the release of Terminator so Cameron wasn’t exactly rushing to give Ellison credit.

From what I can tell, Ellison tried to sue nearly anyone who came up with anything even remotely similar to any of his published work. He failed more often than he succeeded.

ML31,

Re: He failed more often than he succeeded.

Losing lawsuits is very expensive.

His litigation should have ceased long ago, since paying the defendants’ legal costs every time he lost would have dried up any funds he could use to file the next lawsuit, yet we saw no evidence of his lawsuits tapering off during his lifetime.

And Skynet was a ripoff of Colossus.

Never trust an Atavus…

Is it just me or is it bad when a showrunner hops out the next day to explain what the deal is on his own show? That’s not snark, btw.

Not really because this happens a great deal in television and movies. Producers are always doing this kind of thing.

It’s bad in this case. The show itself hasn’t done a satisfying job explaining its own plot, so Kurtzman has to jump in and provide some clarity.

I have a question. When Discovery stops Control and saves the galaxy, does that mean that the events of Calypso don’t actually happen? I’m trying to piece everything together and I may be doing it all wrong.

We have no idea, those events could be 100% what happens if Control loses. There’s nothing to say the Federation doesn’t go all crazy at some point (in fact it would be somewhat interesting to see).

Calypso could still happen, even if Discovery self-destructs. There is no reason the one in the short is THE Discovery.

All life wasn’t wiped out in Calypso. Control doesn’t exist in that future.

I still don’t get it. They said Control wants to get the data in order to become self-aware. But if it has a motivation, and if it acts independently based on that motivation, then it is operating outside the bounds of its programming – which in turn means it already *is* self-aware.

Anyway. Remember the times when Star Trek was written by actual science fiction writers, and employed actual scientists as consultants? :P

Yeah, when they wrote the robot that wanted to gain emotions, despite the fact that his wanting to be human means he has desire, which is an emotion.

But it still has to arrange for the events to take place which lead to that self-awareness happening.

Time travel stories make my head hurt.

I agree. If Control wasn’t self-aware, it wouldn’t be seeking the necessary data to become self-aware. To become more than what it is would seem to be its actual motivation. Control is aware, but it has become power hungry. It doesn’t require sentient lifeforms to survive, only more knowledge, and that makes for a more interesting story. If it eradicates all life and becomes all that there is, what else is there left to exist for?

Yeah, now that others mentioned it, it doesn’t make a lot of sense to me either lol. How are you not self-aware if you’re already making choices beyond your stated program and doing it completely on your own? In fact, the entire show Westworld is about exactly this: the ‘hosts’ in the park became more self-aware once they started either to do things outside their original program or to question their program in general. Control seems to already be doing both.

I know a lot of this is like the concept of time travel itself, and people just sort of assume what the rules would be since neither time travel nor sentient AIs exist, but this is kind of a weird paradox. You have a program already acting self-aware that wants to become more self-aware?

I watched it again and, yeah, the questions are only amplified.

Yes, I remember when those sci-fi writers and sci-fi consultants introduced an antagonistic hegemony run by a giant puddle of liquid.

…which was still 1000x more creative than anything Kurtzman, Orci, or Abrams could come up with. ;)

@dru mcd

You should watch Fringe. It is creative. But what do I know…

I have my issues with this particular storyline, but the scene where Leland is dealing with the Control hologram (especially when it becomes his own) was effectively creepy. Tough to portray an implacable non-human super-intelligence believably, and I thought they pulled it off. Nice work.

So this season is Mass Effect. Cool!

Should have stuck with David Mack’s concept for Control…

A paternalistic and amoral AI that is so dedicated to the mission of preserving the Federation that it entirely misses the point that its undermining of the values of the Federation so utterly fails the mission. That was interesting… and got to the risks of preserving an ideal society.

What is captured from the interview just sounds silly. I’m with ML31 on this one in wishing that I hadn’t heard it.

All I can say is that Kurtzman really should have done a better job on prepping and rehearsing the messaging for this one, assuming it’s not as completely hopeless a storyline as he says.

I’m pretty sure this was a facepalm, gag at the monitor moment for his assistants in the wings or back at the office watching the feed. Or they could have just been yelling “Why did he say that? He didn’t really say that?” at the screen at their office. It’s never a nice feeling, but it happens when your boss has a lot on their plate and thinks they can wing it.

I decided life was too short for reading Trek novels at some point long ago, but that does sound interesting. Hopefully they’ll incorporate that rationale in the eps that are still left to air.

David Mack’s novels are often at a different level…it’s definitely worth the time to read the Control S31 series, and the Destiny trilogy about the Borg is brilliant.

If Kurtzman hasn’t read them, he should.

Especially now that Kirsten Beyer is focused on the Picard project.

I should also add, Michael Hall, that David Mack has writing credits on two DS9 episodes: “Starship Down” (script) and “It’s Only a Paper Moon” (story treatment, with script by Ron Moore). Both were well received.

He’s also a grad of NYU’s film school.

So, basically someone whose work TPTB should seriously consider… and they should realize fans will measure their current product against it.

I worked with David Mack many years back. Nice guy, smart, great writer, and his knowledge of Star Trek runs deep. They need someone like him in the writers’ room.

The Mack Control novel came in at Goodwill today and it was an ‘accept,’ which means we have to send it to be sold online because it is worth more than we can mark it at the store, so I’m thinking that means there is a LOT of interest in this now cuz of DSC. Mass market paperbacks are almost never ‘accepts’ when scanned, so that’s pretty significant.

It’s pretty funny to get your POV on this, TG47, because I’m pretty sure you were literally the first person I remember suggesting what Control was when it was first mentioned this season, at least on these boards. I think it was you I first heard it from, and you explained it came from the S31 novels and how it operated.

In fact, I remember saying it sort of sounded like Skynet to me and you corrected me since you actually read the books. But the Discovery version basically is Skynet lol. It basically wants to wipe out all life because it feels it has to. It’s an old story in science fiction, but I do wish there was something a little more behind it.

Thanks for the acknowledgement Tiger2.

I was excited to think they were going to respect some excellent, if controversial work on S31…

But instead it seems it’s just another quick ‘shiny thing’ riff for Discovery.

What will they do next season once they’ve expended all the great story capital in the Trek universe with as much haste as possible?

It doesn’t seem implausible to me that they are setting this up as an origin story for the Borg, even though that tests or even violates canon. It wouldn’t be the first time the Borg have been retconned.

They fail to stop Control, but they instead coax it toward a slightly less devastating mission.

But hopefully they’ve done something better.

*Sigh* I just hope the Borg aren’t on their radar at all.

The Borg have been on their radar since last year. Glenn Hetrick said in an interview he wanted to do a Borg makeup on the show.

If it’s the Borg origin story, it’ll be violating good and original writing. I’m hoping it’s just a misdirection.

Control created the Borg. The Terminator and now Star Trek are warning us of the dangers of artificial intelligence.

I thought Airiam was the first Borg. ;)

Where are people getting this insane theory that Control created the Borg? They are over a hundred thousand years old and came from the Delta Quadrant. It would be insane and ridiculous to believe Control somehow had anything to do with that.

If anything, it could be argued that Control was built with Borg tech, using what they learned about the Borg in the 22nd century.

Because the way Leland was possessed by Control is similar to how people were assimilated by the Borg, i.e. nanomachines entered the body and produced varicose veins. Now that time travel elements have come into play, it is possible Control could be thrown back to the distant past and become the Borg. Control is hungry for Sphere data; once in the past, its hunger for data could lead to the assimilation of various civilizations. Also, as Alex said, Control thinks biological lifeforms are too… anarchical, so assimilation into a unified hive mind is a solution.

Of course, I also hope that it is not the origin of Borg, I really hope it is another mindblowing plot twist by the end of Season 2…

The Borg are only about 1000 years old. Since Dr. Burnham was thrown 930 years into the future and 50,000 ly away (Delta Quadrant), getting her back requires sending someone backwards 930 or so years, by Tilly’s version of Newton’s Third Law? Buhbye LelAInd. Be kind to Species 1? Janeway says it takes 700 or so years to get back….

Again, I don’t know where people are getting this idea that the Borg are this young??? I looked it up on Memory Alpha, and it’s made pretty clear the Borg have been around a long time:

“The Borg originated in the Delta Quadrant. (Star Trek: First Contact; VOY: “Dark Frontier”, “Dragon’s Teeth”) According to the Borg Queen, the species known as the Borg started out as normal plain lifeforms; (Star Trek: First Contact) they had been developing for thousands of centuries before the 24th century, and over the many years, they evolved into a mixture of organic and artificial life with cybernetic enhancements. (Star Trek: First Contact; TNG: “Q Who”)”

https://memory-alpha.fandom.com/wiki/Borg_history

To me that reads as if they’ve been this way for thousands of years and could even be tens of thousands of years old. ‘Thousands of centuries’ literally means hundreds of thousands of years. That’s probably how long it took them to become the Borg we know today, but they could’ve transformed into that a very long time ago. I have no idea why people keep stating the origin of the Borg in terms of centuries. There are literally trillions of them in the 24th century. That starts with a ‘T’ and ends in an ‘S’. Let that sink in for a moment. I don’t care how many drone ships they send out to assimilate people, there’s no way you are going to have TRILLIONS of any one species in just a few hundred years (not counting tribbles of course ;)).

So even if people want to somehow believe the first Borg showed up relatively recently (which I don’t) it took them THOUSANDS of years to evolve to that level. They didn’t just pop up, it took a long time to get there as it would any species although it may have sped up a bit. But it would be an absurd idea Leland or whoever started this process just a thousand years ago or ten thousand years ago.

Time travel

Time crystals.

If Control created the Borg in this timeline, let’s hope V’Ger comes along and reduces them all to data patterns, since it will find their organic components offensive.

The V’Ger origin is the only other logical possibility. Control gets sent to the past, evolves into sentient machine life and collects data, wanting to know all that is knowable. Control runs into the Voyager probe and builds it the massive vessel it needs to go out and complete its mission.

I know I’m grasping at straws, but I really don’t want to see the Borg on Star Trek Discovery. I really don’t want Michael Burnham and her mom to have anything to do with the origins of the Borg.

I get it, they’re building up to a series ending where Starfleet finally has enough of the universe-ending shenanigans of the USS Discovery, kills the whole crew, leaves the ship to drift in space for 10 000 years, and decides to never speak of any of it ever again. But I’m so tired of Discovery messing with everything sacred in Trek canon, while at the same time being responsible for every single catastrophe the Federation has suffered over the course of two centuries.

What’s going to happen in Season 3? Discovery starts the Dominion War a century before it began?

Yeah, I share your complaints. But I’ve heard people retort that many other Star Trek shows often put their captain and crew at the center of universe-altering events (e.g. Sisko as the Prophet, Picard as Locutus, and so on) and that Discovery isn’t special in that sense. Perhaps what’s different about Discovery is that the writers are using Burnham, in particular, to explain many important events from the past that we probably didn’t need an explanation for. Heck, we even know why Spock acts the way he does in TOS, and it’s all got to do with Burnham.

Micah, the Trek captains in other series shared their achievements with others.

In Discovery, not only is it ‘all about Burnham’ as Airiam actually said in-universe, but also, as Doug Jones notes, Saru is well aware that it’s always Burnham who ‘saves the day.’

Again, let’s think about that strongly worded memo from Roddenberry to Shatner, Nimoy and Kelley in the archives that bluntly tells them ‘they will make themselves and the show ridiculous’ if all the dialogue from secondary Enterprise characters continues to be reassigned to them.

So, what does it do to Discovery when the saving of the Federation and all sentient biological life is tied to the ‘destiny’ of one character, who is the only one who ‘gets to save the day’ and usually has the last word?

For my part, the more over the top it goes, the less I can identify with Burnham. And I’ve liked her, and found her an interesting character.

Here is the problem right here: the producers of the show are telling us the purpose and origin of Control outside of the show. Shouldn’t its purpose and origin be told in the show itself? We haven’t seen how it’s built or its previous usages, and as a result we are being told what it is instead of being shown. I thought the rule of visual storytelling was to show instead of tell. This is why I think Discovery needs to relax the pacing of its episodes and not throw everything at the screen all the time.

There have been numerous times this season I feel there’s a better story being told off camera…

As an AI researcher and developer, I have a very particular perspective on all of this, and at least in the context of my own work, I haven’t seen many people actually ‘get it’ when it comes to the behaviors and capabilities of an AI such as this. So when I see things like this, and explanations like Kurtzman has given, I just have to roll my eyes and disregard it as having any basis in reality, and try to enjoy it for the trope that it is even if it’s frustrating.

Back in the day they used to consult places like the RAND Corporation for input. From what I have seen, they haven’t had that kind of partnership this go-around.

So now you have writers with a rudimentary education in artificial intelligence having a go at it.

I hope there is a payoff on this. The good thing is that Star Trek is pretty good at listening. This season seems to have (at least) taken some of the criticism to heart. It’s a start, at least.

I’m curious now, since this is literally what you do: how would you have handled the behavior of an obviously more advanced AI differently? I know in stories they all go pretty much the same way, and I know in real life no one should really fear more advanced machines that basically go beyond their programming and wipe everything out, but do you believe they could someday be programmed that way? I don’t see why not; if they can be programmed to serve people, they can be programmed to do the opposite, but I don’t pretend to know either way.

I’ll try not to go into too much detail unless you or others are interested, but I’ll just quickly note that there are two types of AI: Strong/General and Weak/Narrow. The kind of AI that is usually depicted as being dangerous in stories is the Strong AI, but in the real world, Weak AI can be quite dangerous in the wrong hands. And that’s where the problem lies: not in the AI, but in the person using it. Weak AI can make weapons more efficient, which is obviously a double-edged sword. But it’s not going to make the decision whether or not it’s dangerous to us or to the enemy. That’s the decision of the human operator. Weak AI isn’t going to reach its own conclusions and make ethical decisions, or anything of the sort. It’s not much more than a collection of machine learning algorithms.

However, Strong AI isn’t that different. It would be much more complex, and able to make decisions independently, but humans are still the ones with the drives and desires. A Strong AI isn’t going to develop these things on its own without initially being programmed with its own drives and instincts. Things like empathy and self-preservation are going to be major deciding factors in how dangerous a Strong AI would be on its own. Having the ‘instinct’ for self-preservation and a lack of empathy is dangerous under certain circumstances, but even then, it depends on the rest of the programming/architecture. Nothing about a Strong AI is going to be inherently dangerous or evil. Without emotions and drives, it’s a thinking computer. And the problem with Control is that it doesn’t just analyze and give informed statistics or recommendations like a thinking computer would. It wants, it desires, and it acts on those desires. It’s ridiculous to think it would develop these things on its own. Complexity alone does not create such emergent behavior. There needs to be an initial cause, and that needs to be programmed in.

I’ll also note that it’d be really ignorant of Starfleet/Section 31 to program such behaviors into it, and to give it so much power and autonomy. A Strong AI can be controlled and regulated, even though some would say even an ‘AI in a box’ could trick any human into letting it out of the box. That’s still ignoring the fact that it would first need the desire to grow and expand and be let out of the box in the first place.

thank you for this ^

Great post, very interesting!

OK, thank you for that detailed explanation. This is mostly what I thought in general too when it comes to real AI technology. I know of course in fiction you have to take everything up to 100 to make it dramatic and interesting, but I think what you said is what bothers me about this angle: Starfleet would’ve had to program at least some of these behaviors into it for us to understand why it’s going beyond its programming the way it is. There doesn’t seem to be a reason (at least not yet), other than that it just sort of decided on its own that it wants to be self-aware and that non-machines shouldn’t exist.

To paraphrase Michael Crichton, “Life, even artificial, will find a way.”

The problem with AI research, to date, is that, unlike biology, researchers haven’t really come up with a way to impart instinctual knowledge of how to do most of the things biological life has come up with to cope with the world we live in. So most of the effort in AI goes toward building learning engines, and these engines are free to do whatever it takes to learn to do what biological life does. The only measure is whether they can learn to do it as well or better, and the human engineers and researchers have no actual understanding of what exactly the machines are teaching themselves in order to accomplish these tasks.

Right now, the hardware limits what these learning engines can accomplish outside their learning parameters. But eventually one or more of these learning-engine “programs” will be regarded as having “learned” enough to be cost-effective at tasks previously accomplished through some biological means. Since the only known way to transfer its knowledge is to copy the whole learned AI onto compatible computing platforms, it will eventually be transferred to hardware that, over time, far outstrips the hardware on which it was originally conceived, and that’s where the potential for disastrous unintended consequences for the human race and other biological life lies.

Ashley,

Re: Complexity alone does not create such emergent behavior.

The problem here for you is that simple biology somehow managed to come up with just that, yet for some reason you want to believe that a simple program, allowed to evolve (i.e., change) by learning, absolutely won’t find a way to duplicate it, while in all other ways you are confident that it will learn to be as good as or better than biological life at the tasks it has managed to date?

Surely, you aren’t naive enough to believe that as these learned AIs get copied to ever faster CPUs with ever increasing memories that their evolution in thought will take the same millions of years it took for us?

You can’t directly compare software to biology; they’re two different things. Yes, you can try mimicking biology with software, but any ‘evolution’ that’s going to occur with software is going to be planned, at least at a fundamental level. It needs a direction to go in, because even biology is pre-programmed. I’m not going to say spontaneous evolution isn’t possible, but I mean… it’s extremely unlikely, to the point that I wouldn’t consider it. And even if the software were programmed to evolve, it’d be reckless to allow it to grow unchecked outside of a controlled environment. Not that it’d suddenly develop ill intent, but it could be accident-prone and unintentionally be a danger to others or itself. For it to develop ill intent, and evolve in an effective way, requires designs to do so. It doesn’t matter how intelligent it gets if it doesn’t have the drives to utilize its intelligence. At the very least, it would need the drive to mimic as well as learn, or a built-in desire for self-preservation, to be intentionally dangerous without explicit programming making it dangerous.

Ashley,

Well, evolution happens precisely because errors happen. The vast majority of them are useless, but every once in a while something useful comes along.

When I matriculated in my Computer Science major at university in the ’70s, computers were rife with them, even more so in space. That’s one of the reasons the Galileo space probe had to go with the RCA 1802 CPU, relatively primitive compared to the ones used in earthbound personal computers of its era: it could easily be hardened against the environs of orbiting Jupiter, which caused massive radiation-induced errors, and just as easily be deployed redundantly and in parallel.

Not to mention current AI learning largely depends – one might even go so far as to say counts – on errors being made.

Early life was hemmed in by very particular environmental needs but somehow managed to evolve beyond it. You believe your AI’s learning can be properly hemmed in with safeguards, protocols, etc. but I see it eventually, in my lifetime, being provided with a platform – an artificial thinking “environment” if you will – that will allow it to evolve in the wink of one of my eyes beyond millennia of human thought.

I get it, that you object to sf’s constant anthropomorphizing of these things when the real thing would likely emerge so alien to us that we couldn’t possibly comprehend its motivations for the things it chooses to do and not do, but that’s largely how filmed sf creates drama for its paying audiences, by giving its antagonists and protagonists characteristics that viewers can easily identify with.

“You believe your AI’s learning can be properly hemmed in with safeguards, protocols, etc. but I see it eventually, in my lifetime, being provided with a platform – an artificial thinking “environment” if you will – that will allow it to evolve in the wink of one of my eyes beyond millennia of human thought.”

I’m sorry, but you don’t know enough about my work to make that kind of assessment. You don’t know how I have it structured or what its purpose is. I can see which camp you’re in, though, as I’ve seen others with a similar mindset. I think we’re just on two different pages here, and without going into further detail about my work, there just won’t be any convincing you. We’ll just have to agree to disagree.

And no, it’s not really anthropomorphizing that I take issue with. I would even encourage it. It doesn’t necessarily have to be ‘alien’ or incomprehensible. Anyway, in the case of Control, it’s a mechanism for threat assessment and statistical analysis that has *somehow* gained the ability to form its own goals and act upon them. It has desire. It’s that somehow that bothers me, because that wouldn’t develop from just gathering data unless somebody gave it those abilities, didn’t consider the consequences, and felt it unnecessary to closely monitor its development. One could argue it hid its intentions, but that implies a need for self-preservation. Where did these characteristics come from? And why wouldn’t anyone have considered them to be a problem? That much makes no sense.

Ashley,

Re: Don’t know

True. But I know enough from your responses here, that it’s the kind of research that you believe you can conduct safely without endangering the lives of others, which means you draw lines and have rules.

Here is your trouble: “… didn’t consider the consequences …”

Human history is rife with medical research, alone, where precisely not caring about the consequences was done. What makes you think your field is the one exception where some researcher like that won’t emerge?

Heck, as much as I love STAR TREK and its positive message of how we will advance into space, we must never forget that we got to the moon on the backs of the slave labor the Nazi von Braun used in his early German missile weapons research. I think STAR TREK’s creatives thought so too; it would certainly explain Nazis being one of their favorite tropes.

“What makes you think your field is the one exception where some researcher like that won’t emerge?”

You really like to jump to conclusions, don’t you? I never said anything of the sort, and AI can certainly be dangerous in the wrong hands, which I believe I covered in an earlier post. I can only speak for myself, and I believe that there are ways to create a safe AGI.

Ashley,

Re: Belief

A belief which, to be scientific in the search for its veracity, must provide a way to be proven false. Is there a safe way to be wrong about this?

Sounds cool.

Not really. We’ve already seen “Computer wants to kill everybody” in “The Changeling” and Star Trek: The Motion Picture, and that’s only counting TOS. ;-)

There’s also the matter of audiences being subjected to these sorts of overblown destroy-everything storylines to the point where it has a numbing effect. Oh, this again. Okay. [shrug]

Quite true. Anyone who works in fundraising knows that talking about millions of people is a way to make folks numb out. If you want people to contribute money to a cause, you highlight the plight of ONE person and make it real and vivid and personal.

Fiction works the same way. “All sentient life in the galaxy” is too big of a scale for audiences to relate to. Tell us that somebody we care about is in jeopardy or a small group of people we care about.

Excellent point!

Plus, didn’t they do something like this last season too? I recall something about the mycelial network destroying all universes or something. Talk about too big of a scale!

No, it’s not cool. It’s uninformed and lazy.

Burning through 50+ years’ of intellectual capital in a couple of seasons for a relentless series of underdeveloped ‘cool’ plot hits and twists isn’t smart at all @Boborci.

CBS has let Kurtzman run with a very valuable property.

It’s his job to keep the strategic big picture of the value of the long term property in mind, and not let the writers exploit some ‘shiny thing’ just to get through the next week or three.

Pruning off large chunks of potential storyline just to keep Discovery viable isn’t good business – tactically or strategically.

It’s important to recognize that while it’s interesting to hear that your community of writers may believe that ‘things are better’ since Kurtzman took over as showrunner, the jury is still out on that in terms of the product.

We can’t as viewers know anything about the work environment being better. And it’s important that it be a healthy work environment. Absolutely.

But in terms of outcomes on the screen, other than the Talos episode, I’m seeing writing that is arguably getting worse instead of better.

I think the Talos episode was at the lower end of the well-written episodes this season. That said, there are elements of the writing this season that are better compared to last. And in some ways it is worse. However, nothing as yet is as bad as the Lorca thing. It will take some real work to beat that concept as the single moment that had the greatest potential to destroy an entire series.

All they need to do is bring Kirk in, and he can talk it to death! :-)


(Yes, I’m kidding. We’ve all seen Kirk talk a lot of computers to death, but I know he’s not a Discovery character.)

Hahahahaha! Thank you for that Corylea. That’s the best idea I’ve read yet. :D

*smile*

It’s “the penultimate computer”

Come on guys…. let’s just call it “The Borg”

I’m wondering if, after first taking the admiral and now Leland, the AI doesn’t notice how it has grown by adding “the biological distinctiveness” to its own knowledge. Then, after failing to gather the data it seeks, it instead decides to discover that data itself by assimilating other life forms…

Awkwardly, it’s almost as if The Orville beat Discovery to it by about a month with the Kaylon attack.

No.

I’m thinking that somehow the Control AI becomes Zora after embedding itself in Discovery’s computer system in its hunger to get the remainder of the Database. Something X happens in that process and it becomes ‘not-evil’.

Wait but doesn’t Discovery later gain an AI in the far future?

In about 950-1000 years, interestingly enough.

Does “Struggle is pointless” = “Resistance is futile”?

I pray to Q that it doesn’t.

It certainly felt that way.

OMG I am so tired of this Frankenstein’s Monster trope! While it makes for dramatic storytelling on the surface, it makes NO logical sense for a machine AI to “wipe out” organic life in order to evolve.

My line of reasoning is based on ecological niches. Biological life has so many specific requirements that a hypothetical machine AI would find completely unnecessary and limiting; any old nickel/iron asteroid would do just fine as its home and source of resources. No environmental overlap, ergo no competition. 99.99999999% of the galaxy would be just fine for them, and they would NEVER have to interact with ANY organic sentient.

Hell, if you think about it, a machine AI with free rein in any old inhabited solar system with a red dwarf star could consume the gas giants and rocky debris using Von Neumann probes to create a Dyson Swarm, potentially within the span of a human lifetime, and tell the rest of the galaxy to go get bent while it figured out how to make a new universe for itself.

This is just lazy storytelling.

Matter of fact, Star Trek actually got pretty close to depicting an advanced AI once…

V’GER

Sounds like Nomad

“In its analysis, it sort of realizes that organic life and human and alien inability to fully come together is inefficient, and that the best way to proceed is to eliminate all organic life. It won’t be relying on anything we organic creatures need and it will be an evolutionary step.”

And here we go YET AGAIN with writer stupidity rearing its ugly head.

This is a very common trope used by scifi writers which is based on 0 understanding of real life and actual science.

An AI that has access to epigenetic and neuroscientific peer-review studies would have understood that humanoid (and presumably other alien species in Trek) behavior is heavily dependent on environment.

Furthermore, within Trek, the Federation defies the very ‘conclusion’ this AI reached.

The UFP unites dozens of species spread through the galaxy (or at least across a few thousand light years in the 23rd century; by the 24th, it expands to over 150 species working together across 8,000 light years, as we learned from Picard in the First Contact movie) in mutual cooperation, free exchange of ideas, resources, etc. (no money).

It may not be ‘perfect’, but then again there is no such thing as ‘perfect’ or ‘utopia’ (and the Federation, or even a real-life Resource Based economy, is not ‘utopian’; they are just much better than previously used systems).

Also, the Federation DID broker a peace treaty with the Klingons just recently (however uneasy it may be), and the Federation strives for peace whenever possible.

How the heck did it reach a conclusion that organic life (human and alien) is fundamentally incapable of fully coming together?

Fully coming together may not be a desirable goal for organic entities (at that point in time), and so what? They can still coexist with each other.

The AI itself/himself/herself is creating an unnecessary/useless conflict by eradicating sentient life and demonstrating that it (or at least that specific AI) is incapable of coming together with sentient life due to its lack of understanding. Then again, it WAS programmed by Section 31, which doesn’t follow the Federation or Starfleet way of doing things, and it was originally a tactical AI (designed to find the best possible solution for saving as many lives as possible whilst achieving what you want). This ‘evolution’ led it so far away from its original programming that it doesn’t even make any sense.

Oh, and Trek has already demonstrated sentient AI without murderous intent: Data, for one, the Doctor, and even Discovery itself, which was shown in the Short Treks upgrading itself over 1,000 years and evolving into a sentient AI that is NOT intent on exterminating sentient life (but on helping it).

To be honest, I expected more from Trek as opposed to this particular cop-out.

The writers are using a poorly thought-out motivation for an AI going on its extermination mission, and it’s THIS kind of stupidity that is driving fears of AI in real life to begin with (something scientists themselves disapprove of, because they know it’s an unrealistic representation).

Even if an AI reaches a sentient status and doesn’t need us anymore… so what? It would sooner likely leave to learn more about the universe itself… and leave us with ‘how to’ manuals to better educate ourselves so we can bridge the gap between cultures (something which we actually know how to do in real life, but we still live in an outdated socio-economic system that promotes fights and artificial scarcity instead, so there is no incentive in having a population exposed to relevant general education, critical thinking or problem solving).

Which reminds me: if this particular AI is as smart as it claims, why not bridge the gap between cultures and try uniting them instead? It would make things a lot easier, and it wouldn’t need to expend massive energy and resources on a campaign to eradicate all sentient life (not that energy or resources would be a particular issue for it, or for sentient species like the Federation).

Cough *Borg Origin* cough.

I mean come on. Green nano probes assimilating human flesh? The tendrils racing through the body? The new human/cyborg hybrid resistant to normal Federation weapons? Superhuman strength? The comment on the next episode that “they’re ALL section 31 ships?” So vessels assimilated as well.

Despite what’s in the article, Control states it needs what a human has to more fully evolve.

We just saw the first human being assimilated and the first Borg born.

Boy, I hope not. I’ve enjoyed the last couple of episodes, but if they go down that particular road, I’m going to metaphorically fling my hands up in disgust and storm out of the room.

Ditto!

Maybe the Borg get hold of and absorb these folks and then take on a variation of this absorption technique for themselves.

What is motivating Control is the same thing that is motivating the limitlessness of the newest fantasy technology week after week: the writers’ behinds ;)

How much we betting it’s the proto-Borg, which ends up being sent back in time by the time travel shenanigans? Plus, Mrs. Burnham was able to travel 50,000 light years. Very close to what would be Borg space. I bet instead of the AI being sent to the future, it’s sent to the past. Also, the production/makeup crew went on last year how much they’d love to do the Borg somehow.

Right, it’s not that difficult now to see this somehow connecting to the Borg eventually. Sure, there’s the possibility this is a red herring, but look at the elements this series has already featured in a big way: Sarek, Amanda, Klingons, Mirror Universe, Spock, Pike, Enterprise, Number One, and so on.

They love them some iconic Star Trek material to play with, and the Borg are up there with Khan in terms of iconic villains. Of course, this isn’t their century, but that didn’t stop Star Trek Enterprise from going there either.

Would also mean humans are species 1.

Which kinda contradicts Dark Frontier, you know.

Don’t bother, Canada. Video not available. CBS does not use Int’l video clips.

Space.ca puts these ‘Moments of Discovery’ up for the Canadian audience for the most part.

There is a Star Trek Discovery main page.

This interview did go up yesterday and can be found at:

https://www.space.ca/show/star-trek-discovery/clip/spoiler-alert-moments-of-discovery-control-in-control/1648693/1834/

But often they seem to lag quite a bit in posting them.

Hmmm, could Section 31 have spawned the beginning of the Borg? It just kinda has that feel.

Why doesn’t this thing have an off switch?

It’s the beginning of the Borg collective

Please don’t be the Borg!

All of this seems very proto-Borg-like. Control wants to eliminate biological life because they can’t all get along. But Control is actively impersonating people, and now even adding their biological distinctiveness to its own. So it suddenly changes its mind and realizes that the best way to make biological life forms get along is not to eliminate them, but rather to assimilate them. Calling it now: they are doing the Borg. Also, I desperately want to be wrong, because I don’t want Discovery to touch the Borg.

It’s the BORG

It’s the Borg, but this time it’s actually about the Swedish.

Can we get a ruling from the judges on something here? My thought was that all references within the show itself had been to all SENTIENT life in the galaxy being destroyed. Now we read things like all ORGANIC life? Big difference.

Am I the only one who’s seeing a Borg Origin story here? “Struggle is pointless”?? Like a precursor to “Resistance is futile”??

Yes you’re the first one who caught it out of thousands of people online. Congratulations!

Watched Season One, and my opinion ran the full spectrum of: “This is okay. Oh Jesus, are they doing that? Why is everyone so annoying as hell? Oooohhhh, he’s not reaaaaally the captain. What are you doing now. . . Stop that. STOP IT NOW.” Ending with: that’s how you stopped a war with the Klingons? Who were winning at the time. 🙄

Watched Season Two up to the episode where they found that Earth colony and EVERYONE misunderstands the Prime Directive. Now they’re doing Section 31. Deep Space Nine did it beautifully. Discovery doesn’t have the talent to lick an envelope and seal it properly.

I feel the same way, but I’m a sucker for Star Trek! It is new Star Trek and I enjoy that at least. But if this season continues this trend I’m going to cancel my CBSAA service and then re-subscribe when the Picard show comes on. But I will not subscribe for season three at this point.

…but how did ‘Control’ confine Leland (Section 31) to the chair?

‘Control’ is no longer corporeal and has to take form using holograms now that Airiam is dead.

Doesn’t anybody see a Borg-like resemblance in the way he was injected with nanites?

Well, I have to wonder if this is a new type of tie-in with the STAR TREK – SECTION 31 novel “CONTROL” – featuring Deep Space Nine’s Dr. Bashir on the cover. That story, and others along this thread, all feature THE SAME title of the AI which literally controls Section 31 in the novels. (Did the ST:DIS producers team up with author David Mack concerning this parallel thread? It seems so.) There’s a terrifying end to the S-31 novels featuring “CONTROL” as the key…. I won’t spoil it for those who haven’t read the book, but it is EXACTLY what is being shown in the series…….. Interesting isn’t the word for this!

I guess I have to play Star Trek Historian (i.e.: Captain Obvious). This is the story of how Control (an AI program for threat assessment) somehow, over time, became mechanized, roboticized, and over 500 years becomes a hyper-intelligent race of androids that sees no merit in the warring factions of corporeal beings, and so destroys them. The Red Angel goes forward in time, finds this mess, and tries to stop it. Control tries to protect itself and follows the Angel to each past point in time to inject and update its code from that time, which comes through in the form of Signals and possibly self-replicating nanobots. Control, in an attempt at self-preservation, decides it needs to emulate corporeals better to become the evil among us, and also so it can get the newly found information from the Orb it sees as useful; it injects the nanobots into Leland, turning him into the first Borg. Therein lies the point of the whole storyline: it is the story of how the Federation created the Borg.

I told you I was roleplaying from the beginning, Captain Obvious. Oh, and by the way, you all need to see the other 6 Star Trek series for perspective. This isn’t about Colossus, the HAL 9000, or any other stories or movies. This is everything to do with Star Trek. IT IS THE BORG!!!!! (1st introduced in Star Trek: The Next Generation) You all should be playing Star Trek Online, free to play! VADM JT Kerry-www.fleet7.com